On Twitter, Paul Romer lauds the job that Janet Yellen has done, writing that "In an extraordinarily difficult political context, J. Yellen did an extraordinarily important public policy job extraordinarily well."

However, I've long been a skeptic of the job that both Ben Bernanke and Janet Yellen have done. (It seems I'm the only one who remembers the 2010 discount rate hike she voted for, with GDP 20% below trend, which helped spawn the Tea Party...) The economy never had a full recovery, as growth is still slow and inflation is still below target. I once explained this to a colleague, and she told me, "Sorry, but I don't think you are smarter than Ben Bernanke. He knows more about monetary policy than you." When I was at the CEA, virtually everyone else there thought I was the stupid one, and that Ben-"When Growth is Not Enough"-Bernanke's policy was roughly optimal.

Of course, this was long before Ben Bernanke himself amended his views, to say that central banks should do price-level targeting when exiting a liquidity trap. I.e., Ben Bernanke (2017) thinks the Fed should have aimed for higher inflation in the 2009 to 2014 period, whereas Ben Bernanke (2009-2014) seemed to be content with below-target inflation, let alone inflation above the target. That Bernanke (and Yellen) also believed that when growth and inflation were below forecast, a central bank should not provide more stimulus, but instead lower the forecasts.

However, Bernanke's reappraisal isn't just a repudiation of the views of the 2009 to 2014 Ben Bernanke, but also a repudiation of the views of Janet Yellen over this period. Of course, Janet has only been the Chair since 2014. Since that time, she has seen fit to end QE and raise interest rates repeatedly. So, let's do some Monday-morning quarterbacking on how well this has gone.

In terms of the Core PCE deflator, inflation has been below the Fed's own target under Yellen's entire term, and is currently nowhere near the target. In addition, there's a good case to be made that the Fed's inflation target is, itself, too low. And then there is Bernanke's argument, that, coming out of a liquidity trap period, central banks should aim for temporarily high inflation. Yellen's record here is not good.

How about with GDP growth and employment? If all is well there, slightly lower inflation would not be a real problem. However, here is Real GDP relative to the long-run trend.

Note that the US is well below its long-run growth trend, and getting further away from it. The near-consensus among economists, interestingly enough, is that most everything that can be invented has already been invented, and there just isn't that much "stuff" left. Yes, really. I think that is nonsense (the iPhone was invented in 2007!). In fact the cause is the China (+RER) shock, and then poor regulation during the housing bubble, and poor monetary policy managing the liquidity trap. This is all fairly obvious by now. Strange it isn't already the consensus. But slow inflation along with slow growth suggests that the problem isn't some structural supply problem, but a shortfall of demand.

However, to be fair to Paul Romer, unemployment is way down. This is a good sign, and an indicator that the labor market has improved.

However, it is not the only labor market indicator, and thus, by itself, does not provide a full picture of the economy. The employment rate for prime-aged workers is another legitimate measure to look at, as the unemployment rate might look good if many people have simply left the labor force. And, the prime-age employment rate below shows that, while the US has made steady progress, it is not quite back to the level it was at in 2007. In addition, the 2007 peak, which came after 7 years of relatively slow GDP growth despite a housing bubble, and was also the decade of the collapse in manufacturing employment, was significantly lower than the 2000 peak. (The overall employment-to-population ratio still looks terrible.)

However, even this measure is flawed in several respects. One problem is that, given heavy baby boomer retirement, more jobs have opened up for younger workers than would otherwise be the case. While I don't think an adjustment for this would change the picture that much, we might actually deduct a quarter to a half of a percent for this.

A second factor is that the several decades since the 1970s saw increasing numbers of women enter the labor force. Optimists may say that this trend was simply complete by 2000. But, even since then, we have seen female employment continue to increase on a relative basis. Thus, I would say that our baseline shouldn't be the 2000 peak, but that we should have expected emp-to-pop to have increased more than this. How much more? Perhaps another 0.25 or 0.5%. A good paper could probably tease this out. Again, I don't think this necessitates a large adjustment. But, these are starting to add up, and mean we might still be 3.5-4% below where we should be.

The graph of the female prime-aged employment rate shows that female employment has essentially recovered back to its level in 2007. This means that it is still gaining ground relative to male employment, even since 2000. And, it is still about 7% lower than the overall employment rate.

A third factor is that just because people are working, it doesn't mean they are doing work they are happy with, or have seen the wage growth they would like. Here is the part-time employment rate, which is still elevated, and presents a rather pessimistic figure. And, if more people are working part-time, this is an indication that other people who are working full-time are not employed in their ideal jobs, but would rather have better jobs with higher salaries. And, of course, given the slower GDP growth, incomes have not grown as fast as they used to.

Obviously, incomes are also not increasing as fast as they used to.

Sure, inflation is also low, but GDP growth is slow. That both are slow is an indication that the economy is demand constrained. Why is it demand constrained? Well, the end of QE and four rate hikes are certainly part of the story. Those rate hikes caused the dollar to appreciate, inflation to subside, and more manufacturing jobs to be lost. And this is Janet Yellen's doing. This wasn't a one-time mistake either. She repeatedly failed to hit her inflation target, with slow GDP, and never re-thought the course the Fed was on.

The defence of Yellen (and also of Bernanke) is that she might have liked to have been more accommodative over much of this period, but also had to deal with more conservative elements on the Board (see here).

To get a sense of how competent the people around Yellen at the Fed have been, read this stream of jaw-dropping quotes, stolen from a commenter here on Scott Sumner's excellent blog:

The 2008 “Dream Team”

SEPTEMBER 16, 2008 FOMC TRANSCRIPT

SELECTED QUOTES EXCERPTED FROM ROUNDTABLE DISCUSSION

MR DUDLEY

Either the financial system is going to implode in a major way, which will lead to a significant further easing, or it is not.

MR LOCKHART

But I should follow the philosophy of Charlie Brown, who I think said, “Never do today what you can put off until tomorrow.” [Laughter]

MR ROSENGREN

Deleveraging is likely to occur with a vengeance as firms seek to survive this period of significant upheaval… I support alternative A to reduce the fed funds rate 25 basis points. Thank you.

Mr HOENIG.

I also encourage us to look beyond the immediate crisis, which I recognize is serious. But as pointed out here, we also have an inflation issue. Our core inflation is still above where it should be.

MS YELLEN. I agree with the Greenbook’s assessment that the strength we saw in the upwardly revised real GDP growth in the second quarter will not hold up. Despite the tax rebates, real personal consumption expenditures declined in both June and July, and retail sales were down in August. My contacts report that cutbacks in spending are widespread, especially for discretionary items. For example, East Bay plastic surgeons and dentists note that patients are deferring elective procedures. [Laughter]

MR BULLARD

Meanwhile, an inflation problem is brewing. The headline CPI inflation rate, the one consumers actually face, is about 6¼ percent year-to-date…My policy preference is to maintain the federal funds rate target at the current level and to wait for some time to assess the impact of the Lehman bankruptcy filing, if any, on the national economy.

MR PLOSSER

As I said, it is my view that the current stance of policy is inconsistent with price stability in the intermediate term and so rates ultimately will have to rise.

MR STERN

Given the lags in policy, it doesn’t seem that there is a heck of a lot we can do about current circumstances, and we have already tried to address the financial turmoil. So I would favor alternative B as a policy matter. As far as language is concerned with regard to B, I would be inclined to give more prominence to financial issues. I think you could do that maybe by reversing the first two sentences in paragraph 2. You would have to change the transitions, of course.

MR. EVANS

But I think we should be seen as making well-calculated moves with the funds rate, and the current uncertainty is so large that I don’t feel as though we have enough information to make such calculations today.

MS PIANALTO

Given the events of the weekend, I still think it is appropriate for us to keep our policy rate unchanged. I would like more time to assess how the recent events are going to affect the real economy. I have a small preference for the assessment-of-risk language under alternative A.

MR LACKER

In fact, it’s heartening that compensation growth is coming in a little below expected in response to the energy price shock this year. This has allowed us to accomplish the inevitable decline in real wages without setting off an inflationary acceleration in wage rates.

MR. HOENIG

I think what we did with Lehman was the right thing because we did have a market beginning to play the Treasury and us, and that has some pretty negative consequences as well, which we are now coming to grips with.

MR. ROSENGREN

I think it’s too soon to know whether what we did with Lehman is right. Given that the Treasury didn’t want to put money in, what happened was that we had no choice…I hope we get through this week. But I think it’s far from clear, and we were taking a bet, and I hope in the future we don’t have to be in situations where we’re taking bets.

Mr. FISHER. All of that reminds me—forgive me for quoting Bob Dylan—but money doesn’t talk; it swears. When you swear, you get emotional. If you blaspheme, you lose control. I think the main thing we must do in this policy decision today is not to lose control, to show a steady hand. I would recommend, Mr. Chairman, that we embrace unanimously—and I think it’s important for us to be unanimous at this moment—alternative B

MR WARSH.

Those would be my suggestions to try to strike that balance—that we are keenly focused on what’s going on, but until we have a better view of its implications, we are not going to act.

The optimistic view of Yellen is that, while overall policy was quite inappropriately tight for essentially the entire period since 2008, Yellen may have been struggling all the time against these clowns in private, leading policy on a less-bad course. It seems this was partly true, but the case remains to be made, as I see no evidence that she wasn't in favor of the rate hikes as Fed Chair. She also could have talked Obama into making timely appointments in 2009 and 2010, to try to get policy back on track. Instead, she voted for a rate hike in 2010. I viewed this as unforgivable at the time, and it looks even worse in retrospect.

Of course, she also got unlucky. Had it not been for Comey, her mistakes as Fed Chair would likely not have led to Donald Trump.

Note: follow me on twitter @TradeandMoney

A blog about Manufacturing, International Trade, Monetary Policy, and replication by an academic economist.

Monday, December 11, 2017

Wednesday, November 29, 2017

An Economist's Take on Bitcoin and Cryptocurrencies: It's a Giant Scam and the Mother of All Bubbles

Bitcoin has climbed over $10,000 (when I first drafted this post last week, it was at just $8,200). The cryptocurrency market now appears like a full stock market of fake stocks, with a market cap of $245 billion (update a week later: $345 billion), more than 1% of the capitalization of the US stock market. My take on bitcoin is the standard boring economists' take: bitcoin and other cryptocurrencies are the mother of all irrational bubbles. The South Sea Bubble, Tulip Mania, the Nifty Fifty, and the dot-com bubble were all similar. And, if you'd listened to me (and us economists), you're continuing to live in relative poverty as your friends get rich, with money and wealth coming out of nowhere and millionaires minted overnight.

Pictured here with Nobel Prize winner Robert Shiller, fortunate to experience a balmy -7 degrees in Moscow. He also believes that bitcoin is in a huge bubble.

Despite my view that this is a standard bubble, I tried to buy bitcoin last summer (back at the bargain price of $4,000...), in part because I wanted to see how easy it was to use bitcoin to send money back to the US from Russia. After all, the logic behind bitcoin is that it is a super easy, cheap and fast way to send money. Exactly what I needed. The difficulty I went through in trying to purchase bitcoin only confirmed my worst fears of why I think it is a scam/ponzi scheme. Some of the problems I faced were no doubt specific to me, as a US national living in Russia. Many bitcoin exchanges are country specific, and didn't like my Russian IP address. Others did, but required a lot of information, including a picture of me with an ID, and also a picture of me with a bank statement with my home address (in the US) written on it. I ended up never getting approved, and never got a straight answer from some of these exchanges on why not. Probably, they are minting money so fast that they see no reason to invest in customer support.

But all the information required, even if I had been approved immediately, kind of shoots down some of the logic. If I'm a drug-dealer looking to accept payment in bitcoin, I'm still going to have to provide a lot of information to the exchanges. And, while my troubles may have been unique, bitcoin isn't that easy to use. Your grandma isn't going to be buying groceries or trading bitcoin anytime soon. Indicative of the inconvenience of buying bitcoin, there is a closed-end investment fund traded on the stock market that owns only bitcoin, and was recently trading at twice the value of the bitcoin it holds (see Figure below). That is, people who wanted to buy bitcoin in their brokerage accounts were too lazy to cash out their accounts and buy bitcoin directly, so they paid double the price to avoid the hassle.

In addition, the fees associated with buying bitcoins in Russia using rubles, sending them to myself in the US, and then converting them back into dollars are at least an order of magnitude larger than just buying dollars using my currency broker, and then sending money to myself directly. My normal fees for doing this set of transactions run about $25 for a $10,000 transaction using the banking system and my currency broker. By contrast, I'm told the bitcoin broker in Russia charges 3%, and one in the US (Coinbase) charges 2% per transaction (maybe this is now 1.49% for Coinbase users in the US, although it looks as though they charge 4% to fund an account using Visa/Mastercard), plus whatever the bitcoin miners charge (perhaps .2%?). Even the miners' fees are calculated in a super non-transparent way. It's probably that way for a reason.
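To put rough numbers on that comparison, here is a minimal back-of-the-envelope sketch in Python. It uses only the figures quoted above ($25 flat on $10,000 through the banking system, versus 3% to buy, perhaps 0.2% to the miners, and 2% to sell). The function names are made up for illustration, and the assumption that each percentage fee is taken from whatever balance is left at that step is mine; the real fee schedules may well work differently.

```python
def bank_route_cost(amount, flat_fee=25.0):
    """Cost of sending `amount` dollars via the currency broker and banks.

    The $25 flat fee is the figure quoted in the post for a $10,000 transfer;
    treating it as independent of the amount is a simplifying assumption.
    """
    return flat_fee


def bitcoin_route_cost(amount, buy_fee=0.03, miner_fee=0.002, sell_fee=0.02):
    """Cost of buying bitcoin in Russia, sending it, and selling it in the US.

    Assumes (hypothetically) that each fee is a percentage of the balance
    remaining at that step, applied in the order buy -> send -> sell.
    """
    remaining = amount
    for rate in (buy_fee, miner_fee, sell_fee):
        remaining *= 1 - rate
    return amount - remaining


if __name__ == "__main__":
    amount = 10_000
    bank = bank_route_cost(amount)
    btc = bitcoin_route_cost(amount)
    print(f"Bank route:    ${bank:,.2f}")
    print(f"Bitcoin route: ${btc:,.2f}")
    print(f"Ratio:         {btc / bank:.1f}x")
```

Under these assumptions the bitcoin route costs a bit over $500 on a $10,000 transfer, roughly twenty times the bank route, which is where "at least an order of magnitude" comes from.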

Theoretically, another problem with bitcoin is that there is free entry. Anyone can create an infinite amount of cryptocurrency out of thin air. The marginal cost is zero. The saving grace is that there are network effects -- a currency becomes more valuable the more people use it, and so it will be tough for other cryptocurrencies to displace bitcoin. However, that can't explain why there are thousands of cryptocurrencies with huge market caps. Only 1-2 of these will ultimately be the victor, and bitcoin is likely to be one of them.

Another issue with bitcoin/cryptocurrencies long-term is that if they ever did replace actual currencies in everyday transactions, governments could really lose out. The Federal Reserve would lose control over monetary policy, for example, and to the extent cryptocurrencies enable drug smugglers and hackers and others to evade the authorities and avoid paying taxes, governments will have a real interest in outlawing them. Thus, there is no endgame where bitcoin replaces the US dollar, the Chinese Yuan, or the Euro as the primary currency of a major economy. It is simply too volatile, and there will be nothing to stabilize its value.

The real economic argument for bitcoin is not that it actually provides cheap transaction fees, but rather that it is a really good scam/meme. It's techy, it's complicated, and few people understand it. Those who spend the time to learn how it really works then become part of the cult and evangelize over it. It could be compared to the spread of a religion: If many people very fervently buy into it, it could be a bubble that lasts a long time. This is the optimistic case for bitcoin. There was a group of Japanese in Brazil who went to their graves believing that Japan didn't lose WWII, and it was just US propaganda that suggested otherwise. The bitcoin true believers/dead-enders may hold bitcoin until the day they die, giving it a positive value for a long time to come.

Or, it could be more like the spread of a disease (I'm stealing this from Robert Shiller). To grow, the disease needs a lot of new people to infect. Once about 20-30% of the people are infected, its growth will be at a maximum. But, over time, there are fewer and fewer new people to infect, as most people have had the disease, and the rate of new infections crashes. Bitcoin may not be so different -- the early adopters buy in, sending the price up. The higher price means more news, and is a positive feedback loop as the mainstreamers start to buy. Doubt creeps into the minds of naysayers, who might have believed it to be a scam initially, but now see the price soaring, against their predictions.

Usually the moment to sell is after almost everyone who is a quick adopter has already adopted, the median person has too, and the moment at which people who are typically late adopters start to invest. At that point, the economy will run out of suckers, and the price will start to stagnate and fall. Legend has it that Joe Kennedy sold his stocks in 1929 after a shoeshine boy started giving him stock tips. An older family member of mine was day-trading tech stocks in the 1990s, and then bought a condo in Florida in 2006. This person is my bellwether.

While this may be a reason to buy in the near term, before the late adopters get wind (and, damnit!, why didn't I realize early on that this was a good scam!), be warned that just as the positive feedback loop works well on the way up, it can work in reverse on the way down. A few bad days, and panic selling can ensue. Once it crashes, a generation of people could be so turned off by crypto they'll never touch it again.

What crypto does is settle the debate over whether fundamentals drive stock prices and exchange rates. I gave a talk at LSE a few weeks ago on my research on exchange rates and manufacturing, and someone stated their belief that exchange rates are driven by fundamentals (monetary policy) and so it was monetary policy which drove my results and not exchange rates, per se. However, as we see with bitcoin, which isn't driven by any kind of fundamental economic value, as it pays no dividends and has high transaction fees, bubbles can happen and markets aren't that efficient. (OK, even if you believe in bitcoin, how much do you believe in Sexcoin, Dogecoin, or "Byteball bites", the latter of which has a market cap of a cool $187 million...) There is never going to be a day when everyday people use "byteball bites" to buy groceries.

It also shows another reason why governments might want to tax windfall profits or large capital gains at a higher rate. Those profiting from cryptocurrency are incredibly lucky. Their "investments" don't leave any reason to deserve favorable income treatment relative to wage income. Stock market earnings are similar. Luck is involved just as much as skill.

Lastly, though, let me state my agreement with others that government-sponsored electronic currencies are probably a thing of the near future. If an electronic currency allows every transaction (or most transactions) to be traced by the government, it can cut down on illegal activity, narcotics, and tax evasion. A government could really very easily broaden the tax base, and raise more revenue while cutting taxes on law abiding citizens. This will probably help developing countries (like Russia) where tax evasion is rampant the most. I guess this will happen soon. Greece should do this and leave the Euro system (but not the EU!). Obviously, a digital currency also solves the problem of the zero lower bound on interest rates, reason enough to do it. Were I the Autocrat of All the Russians, I'd have implemented this already.

In any case, I don't want to give anyone investment advice. I have no clue what will happen to the price of bitcoin, although that should be a warning. I hope none of my friends miss out on the huge boom as bitcoin goes from $10,000 to $100,000 just because they read this. Just be forewarned that what goes up must come down. If you do ride the wave up, think about taking something off the table and try to remain diversified. (That goes for the US stock market too, which also now looks quite overvalued...) Once your parents start to buy bitcoin, that's probably a good time to cash out.

Sunday, November 19, 2017

A comment on "The Long-term Decline in US Manufacturing" by Jeff Frankel

Jeff Frankel guest posts over at Econbrowser about "The Long-Term Decline in US Manufacturing".

The theme is a familiar one on this blog. He posts some interesting data, and I agree with him about the determinants of the long-run decline. He shares my "favorite" graph:

However, I also had a few other thoughts:

(1) If you extrapolate forward on US manufacturing employment as a share of total employment, it becomes negative within several decades. Obviously, it can’t be negative, and thus the slope needed to flatten out at some point. Instead, in the early 2000s, it looks to have been below trend. By contrast, the pace of decline for agriculture did flatten out. But, since there is no clear counterfactual, I’m not sure this series tells us that much, in the end. Another problem is that the sudden loss of 3 million manufacturing jobs may have been part of the reason for the slow growth in overall employment after 2000, which is the view of Ben Bernanke. If Detroit loses 80% of its tradable-sector jobs, and as a result, 80% of its retail and government jobs, then its share of tradable-sector jobs wouldn’t change. Then we could conclude that the loss of manufacturing jobs is not what hurt Detroit.

(2) I think it would be good to separate factors affecting the long-run decline as a share of employment, which is mostly fast technological growth and to a lesser extent sectoral shift toward services, and factors affecting the level of manufacturing employment in the early 2000s. The recent decline in the level, which happened alongside the trade deficits, is probably what the public is more concerned with. Trade really does seem to be a dominant factor in the collapse of the level in that period. And the shock does seem large enough to have had macro effects and helped push the economy into a liquidity trap, as Ben Bernanke seems to think.

(3) If you do another international comparison, between US manufacturing output as a share of world output, things don’t look as good. Especially vis-a-vis countries like Germany and Korea, much less China.

I agree with Jeff that protectionism now isn’t the right policy solution. However, US-China relative prices have been set in Beijing for the last 30 years, with China having a clearly undervalued exchange rate during much of that period. Why wouldn’t we have been better off with a free trade regime, with prices set by market forces? Also, if you believe prices matter, why wouldn’t this undervaluation have caused a decline in tradable sector jobs over this period? And, after a long period of the US being overvalued, why do you think the US tradable sector wouldn’t be smaller than it would have been otherwise?

I discussed these issues on this blog previously, and on my own blog:

http://douglaslcampbell.blogspot.ru/2017/05/is-us-manufacturing-really-great-again.html

Lastly, Brad Setser links to an interesting NYT article on the new rise in China's protected automotive industry. It's a great reminder of how the US and China do not have free trade in practice, but also of the extent to which the Chinese government has internalized Hamiltonian infant-industry protection.

Thursday, November 16, 2017

Robert Lawrence's New Manufacturing Paper: Confusing Trends and Levels

Perhaps Lawrence's new paper isn't quite as bad as I imagined when I first saw this on twitter, at least with some interesting data, although there isn't much there, as the "analysis" is done by simple plots of aggregate data. My expectations for him were quite low, though, and so I still have plenty of points of contention.

The main issue is that the central argument is against a straw man. He toggles back and forth seamlessly between two related, but different issues with different causes. One is the long-run decline of manufacturing employment as a share of total employment. This long-run decline is, in fact, caused by faster productivity growth, so in that he is correct. However, as far as I know, no economists actually dispute this. And when Trump cites China for the decline in US manufacturing, he is talking about the level, not this "as a share of GDP" bullshit. The rather sudden decline in the level of manufacturing employment in the early 2000s is a different issue from the long-run decline in the share. This was caused mostly by trade, and also by slow demand growth (which isn't necessarily unrelated to the trade shock), but it was not caused by productivity growth. At first, it's unclear which of the two Lawrence is talking about, and given that he says many people believe trade is the cause, it sounds as though he is talking about the second. However, he then switches back to arguing the first point.

He then plots this figure, which is meant to show that China didn't cause a deviation from the long-run trend in the manufacturing share of US employment.

However, as I've discussed before on this blog, I don't think one can infer much about the health of the manufacturing sector based on whether manufacturing employment/total employment is above or below its long-run trend. The reason is that the long-run trend implies negative employment within several decades (see above), which can't happen, so naturally it must flatten out at some point, precisely like agriculture has done. But, there is no clear counterfactual for what the share of manufacturing employment "should" be. Secondly, the slow growth in overall employment after 2000 was likely caused in part by the swift loss of 3 million manufacturing jobs (see my previous post, and here). Detroit lost many of its tradable sector jobs, but then also lost lots of retail and government jobs as revenues dried up. Its share of manufacturing jobs could also well be on its long-run trend. One could then conclude, erroneously, that the loss of tradable-sector jobs in Detroit is not what caused its decline.
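A minimal sketch of the extrapolation point, using round, illustrative numbers that are assumed for this example (a manufacturing share of 25% in 1970 and 10% in 2010) and are not read off Lawrence's figure. The function name is hypothetical. It simply extends a straight line through two points and reports when the share would hit zero.

```python
def year_trend_hits_zero(year0, share0, year1, share1):
    """Extend the straight line through two (year, share) points to share = 0.

    Shares are in percent of total employment. The inputs used below are
    illustrative assumptions, not actual data.
    """
    slope = (share1 - share0) / (year1 - year0)  # percentage points per year
    if slope >= 0:
        raise ValueError("the trend is not declining, so it never reaches zero")
    # Solve share1 + slope * (year - year1) = 0 for year.
    return year1 - share1 / slope


if __name__ == "__main__":
    zero_year = year_trend_hits_zero(1970, 25.0, 2010, 10.0)
    print(f"A linear trend through these points reaches zero around {zero_year:.0f}")
```

Since a share cannot go negative, any linear trend like this has to flatten out before that date, which is why being "on trend" or "off trend" says little by itself.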

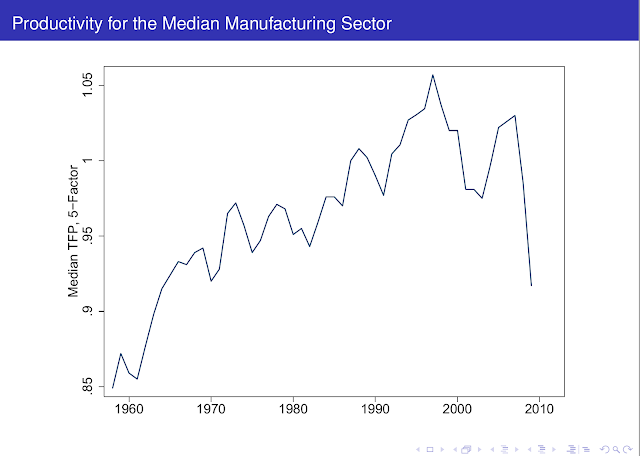

Lawrence says that, even in the period of the China shock, fast productivity growth caused the loss of many more manufacturing jobs than China. But, that's not what I found in my paper. I found instead that productivity growth was normal after 2000, and only a normal number of jobs were lost due to productivity growth after 2000, comparable to any decade before. (Below is the evolution of VA per worker. The growth rate looks pretty constant -- nothing to suggest a very sudden decline in the level of manufacturing employment.)

In addition, productivity for the median manufacturing sector actually declines in the 2000s. This is offset by very fast productivity growth in the top sector, growth which may not have actually happened.

Another hole in the story that after 2000, the main contributor to the decline in manufacturing was fast productivity growth and low demand is that these two factors imply that the US should have had a trade surplus, not a large trade deficit (see the trade balance in blue plotted vs. two measures of real exchange rates, more about the differences between them here). To be sure, I also find slow demand growth after 2000 (particularly for the 2007-2010 period), although, once again, I believe this was caused in part from the collateral damage from trade and the collapse in manufacturing employment.

I was also slightly annoyed that he cites Autor, Dorn, and Hanson (2013) for 985,000 jobs lost due to China (out of 5 million total) rather than the follow-up paper by Acemoglu, Autor, Dorn, Hanson and Price (2016), which includes a longer time period and arrives at 1.475 million jobs lost due to the China shock and input/output linkages in manufacturing, and 3.1 million jobs overall, but this is a minor quibble. Even this estimate does not include the exchange rate shock in the early 2000s, or do anything special for China's WTO accession and the MFA agreement in particular, which almost certainly cost the US jobs in the textile sector. It is also computed with quite conservative methodology -- they multiplied their regression coefficients by the R-squared of the regression.

There are also holes in his analysis with the international comparison. Most of the manufacturing jobs lost in Germany after 1990 were in East Germany. Second, the only Asian, or "capital account" country in his basket is Japan, a country that has been stuck in a liquidity trap for a quarter of a century. There's no Korea, Hong Kong, Singapore, Taiwan, or China. He also doesn't look at levels of manufacturing employment.

One particularly egregious error he makes is to show the decline in spending on consumer goods using 2010 as the last year, without realizing that this was the end of the worst recession since the Great Depression! The problem is that consumer durables slump badly in a depression, so his table is mostly picking up cyclical effects. He then remarks, that, surprisingly, as countries are coming out of a recession the share of goods consumption picks up, without any trace of irony.

In any case, the central message of the paper is clear: Robert Lawrence means to let trade off the hook, one way or another. But he builds his case on trickery. And his pro-status quo position is also not likely to be a winning political strategy for the Democrats in the next election -- it's bad economic analysis and bad politics, and, if followed, likely to lead to precisely the outcome Robert Lawrence, and yours truly, want to avoid.

Friday, November 10, 2017

Did the Rise of China Help or Harm the US? Let's not forget Basic Macro

This is a question which was posed to me after I presented last week at the Federal Reserve Board in DC. Presenting there was an honor for me, and I got a lot of sharp feedback. It's also getting to the point where I need to start thinking about my upcoming AEA presentation alongside David Autor and Peter Schott, two titans in this field who both deserve a lot of credit for helping to bring careful identification to empirical international trade, and for challenging dogma. After all, before 2011, as far as I know the cause of the "Surprisingly Swift" decline in US manufacturing employment had not been written about in any academic papers. This was despite the fact that the collapse was mostly complete by 2004, and was intuitive to many since it coincided with a large structural trade deficit. (Try to explain that one with your productivity boom and slow demand growth, Robert Lawrence...)

On one hand, there is now mounting evidence that the rise of Chinese manufacturing harmed US sectors which compete with China. This probably also hurt some individual communities and people pretty badly, and might also have triggered an out-migration in those communities. On the other hand, typically the Fed offsets a shock to one set of industries with lower interest rates helping others, while consumers everywhere have benefited from cheaper Chinese goods. Which of these is larger? I can't say I'm sure, but of these shocks mentioned so far, I would probably give a slight edge to the benefit of lower prices and varieties. However, I suspect, even more importantly, Chinese firms have also been innovating, more than they would have absent trade, which means the dynamic gains in the long-run have the potential to be larger than any of these static gains/losses you might try to estimate courageously with a model.

Many (free!) trade economists use the above logic (perhaps minus the dynamic part), and conclude that no policies are needed to help US manufacturing right now. However, I think this view misses 4 other inter-related points, and in addition does not sound to me like a winning policy strategy for the Democrats in 2020. And a losing strategy here means more Trumpian protectionism.

First, when trade economists' free-trade priors lead them to argue that the rise of China was beneficial for the US, they forget that China and the US do not and did not have free trade between them. Just ask Mark Zuckerberg. Or Google, or Siemens, or the numerous other companies who have had their intellectual property stolen. (Of course, the Bernie folks also need to remember that it is probably rich Americans who have been hit the worst by China's protectionism -- IP piracy certainly harms Hollywood and Silicon Valley, probably quite badly, while Mark Zuckerberg may be the most harmed individual). It might be that the rise of China was beneficial to the US, but would have been even more beneficial had China not had a massively undervalued exchange rate for much of the past 30 years. If you disagree with this assessment, then you, like Donald Trump, are not exactly carrying the torch of free trade. (What's wrong with having the market set prices, tovarish?)

Another problem with the view that everything is A-OK in trade is that China's surpluses are now reduced, but reduced due to a huge reduction in demand and growth in the US and Europe. That's not a good way to solve imbalances. See diagram below, drawn on the assumption that the exchange rate is held fixed: A shift down in US demand (increase in Savings, SI shifts right) left income depressed, but improved the trade balance from CA0 to CA1. However, if US demand increases/savings decline, the US won't go back to the Full Income/Employment and CA(balanced) equilibrium. For that, we'll still need to devalue the exchange rate (the XM line shifts up with a devaluation and down with an appreciation). As they say, it takes a lot of Harberger triangles to plug an Okun Gap.
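The logic of the diagram can be sketched with a textbook Keynesian open-economy toy model. Everything here is an assumption made for illustration: the function name, the saving and import propensities, and the spending and export levels are hypothetical and are not the calibration behind the diagram. Income solves Y = (autonomous spending + exports) / (s + m), and the trade balance is exports minus m times Y.

```python
def equilibrium(autonomous, exports, s=0.375, m=0.125):
    """Return (income, trade_balance) for a simple income-expenditure model.

    autonomous: autonomous domestic spending (hypothetical units)
    exports:    export demand, taken as fixed while the exchange rate is fixed
    s, m:       marginal propensities to save and to import (assumed values)
    """
    income = (autonomous + exports) / (s + m)
    trade_balance = exports - m * income
    return income, trade_balance


if __name__ == "__main__":
    # Starting point: full-employment income (100 here) with a trade deficit.
    print("start:           ", equilibrium(autonomous=40.0, exports=10.0))
    # Demand falls (savings rise): income drops, the trade deficit narrows.
    print("demand slump:    ", equilibrium(autonomous=35.0, exports=10.0))
    # Demand recovers at the same exchange rate: the old deficit comes back.
    print("demand recovers: ", equilibrium(autonomous=40.0, exports=10.0))
    # A devaluation (modeled as higher exports) is needed to reach
    # full-employment income and balanced trade at the same time.
    print("with devaluation:", equilibrium(autonomous=37.5, exports=12.5))
```

In this sketch there is no level of domestic demand that delivers both full-employment income and balanced trade while exports stay at their initial level, which is the sense in which the exchange rate still has to adjust.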

Sure, you might object that it is less obvious that China is undervalued now. You might have heard stories about capital wanting to flood out of China, not in. But, this is because the exchange rate is set largely by capital flows and not by the structural trade balance. All this indicates is that monetary policy is relatively tight in the US/Europe, not that the structural trade balance is in equilibrium. It isn't.

The third factor that most economists neglect when they think about the rise of China is the issue of hysteresis, defined weakly as "history matters". Clearly, being overvalued for an extended period of time will shrink your tradables sector. Yes, it can also decrease your exchange rate, but it will for sure decrease the equilibrium exchange rate you need to balance your trade. And if your exchange rate is being set in Beijing, or by the ZLB, then you can forget about a quick return to normality.

The chart below shows that US dollar appreciations are followed by a shift up in the relation between the US trade balance and the RER. Undervalued periods (just 1979) are followed by a shift to the southwest. Thus, shifts to the NW are shifts in the direction of a shrinking (in relative terms) US tradables sector/income. The trade balance itself won't be hysteretic, as movements NW reduce imports, but the intercept of the exchange rate/trade balance slope will be.

This is all as Krugman foretold in 1988...

To put hysteresis another way, consider the event study diagram below of the 1980s US dollar appreciation (from my paper here). The dollar appreciated 50% (black), and as a result, employment in the more tradable sectors (blue dotted line) fell about 10% relative to the more closed sectors (red line). The interesting thing, though, is that after relative prices returned to fundamentals, employment in the more open sectors came back only slowly, if at all.

The above makes clear that a temporary shock has a persistent impact. But, could it actually lower income? Well, imagine for a second what would happen if the US dollar were very overvalued for a long period of time, say, due to policy. Eventually, it would lose all of its tradable sectors. Then, what would happen to income and the exchange rate when it floated again? Would things go back to normal overnight? Or would it take some time to build up the tradable sector capacity back to where it used to be? In the meantime, you would be likely to have a vastly lower exchange rate, which means you can afford less, which means you are poorer. Also, in an inflation-targeting regime, if a period of overvaluation coincides with a recession, and you shoot for just 2% coming out, then you might never recover your hysteretic tradable-sector losses. Thus, it isn't that free trade is bad, but having an artificially overvalued exchange rate is.

Wait, there's more. You might be thinking -- is there really a Macro effect of a trade shock? Won't the Fed just offset a trade shock by lowering interest rates? In that case, won't a trade shock just alter what gets produced and not how much is produced? For a small shock, the answer is probably yes. But, for a large shock that pushes you close to the zero lower bound, like the 2000-2001 shock did, there is no guarantee. The Fed likely would have responded more aggressively to the 2001 recession if not for the ZLB. Secondly, lower interest rates from the Fed work in part through exchange rate adjustment. If the ZLB comes into play, the exchange rate will be over-appreciated relative to what it needs to be for a full-employment equilibrium. This may imply more tradable-sector job losses. And, if China is pegged to the US dollar, that adjustment won't happen. And, as my students can all tell you, monetary policy becomes ineffective with a fixed exchange rate and open capital market. Sure, the US had a floating rate, but being pegged to by China, and essentially by other East Asian economies following the Asian Financial Crisis, leaves you with much the same result.

So, am I then proposing Trumpian protectionist policies? Not necessarily. You see, while US growth is still slow, the US is not at the ZLB any more. The Fed can cut interest rates, and spur growth and weaken the dollar, helping manufacturing. The beauty of this strategy is that it solves multiple problems at once. And, there are certainly specific trade issues that the US could raise with China, in addition to exchange rates. It would, of course, be strange to push on the issue of exchange rates while US interest rates are too high. A higher inflation target would also be a passive way of discouraging China from holding so many dollar reserves. But, I think a possible winning political strategy would be a high nominal GDP target, which will also weaken the dollar, and also campaign on a push to defend US corporate interests in China on specific trade issues, including the exchange rate.

Sunday, November 5, 2017

Ancestry and Development: the Power Pose of Economics?

I was fortunate to be invited to present at George Mason this week. I was very impressed with the lively atmosphere of brilliant scholars. George Mason certainly has had an outsized impact on the economics community, and also likely on economic policy in the US given its admirable commitment to participating in the public dialogue.

In any case, they asked me to present my work joint with Ju Hyun Pyun, taking down the "genetic distance to the US predicts development" research, which Andrew Gelman blogged about here.

It was the first time I had thought about this research in some time. This has evolved into an Amy Cuddy "Power Pose" situation, in which Spolaore and Wacziarg refuse to admit that there is any problem with their research, and continue to run income-level regressions and write papers using genetic distance which do not include a dummy for sub-Saharan Africa, but exclude that region instead. (For example, they have a paper dated September 2017, "Ancestry and Development: New Evidence", which continues to exclude SSA. Surprise, surprise, they continue not to cite us. Note that here, both I and they are really talking about genetic distance, not ancestry generally...)

In their comments over at Gelman's blog, they also stressed that "our results hold when we exclude Sub-Saharan Africa and so cannot be driven solely by those countries".

I am skeptical of excluding observations which are essentially counterexamples. Within sub-Saharan Africa, the correlation between genetic distance to the US and development is slightly positive (Fig. 3 below), rather than negative, so excluding these observations is self-serving. One can also see that there is a significant positive relationship between genetic distance to the US and development for Asia, another counterexample.

Another reason to be skeptical is that if we exclude sub-Saharan Africa and Europe, there is also no significant correlation, although, to be fair to these guys, the sign is correct.

Thus, the remaining question is how robust the genetic distance-development relationship is in Europe. In fact, there is already a paper, by Giuliano, Spilimbergo, and Tonon, saying that the impact of genetic distance on both trade and GDP in Europe is not robust. Note, the early drafts of that paper also said something about GDP in Europe, while the published version stripped out GDP precisely because the referees -- likely Spolaore or Wacziarg -- wouldn't allow it.

I also went back to look at what my referees had said about this paper. Wacziarg has now posted regressions on his website used by this referee, so I gather that he must be the author. Interestingly, Romain had some very choice words for this paper, even though Paula Giuliano is a colleague: “Regarding the paper by Giuliano, Spilimbergo and Tonon, the authors of this paper are clearly referring to an old (2006) version, which contained numerous errors and imprecisions.”

I'm curious, Romain, if you're out there, what kind of "errors and imprecisions" this paper by your colleague had.

In any case, I decided to check if Giuliano, Spilimbergo, and Tonon were really that careless, or whether Spolaore and Wacziarg were, once again, wrong.

Thus, on the metro ride from Dupont Circle to George Mason, I fired up Stata to check how robust the results were when we exclude sub-Saharan Africa. Admittedly, it took me several regressions (see below) to get this correlation to disappear. The key control was a dummy for former communist countries, or controlling for Eastern and Western Europe separately. In each of the regressions below, I've excluded sub-Saharan Africa. In column (1), I include controls for absolute latitude and a dummy for Europe. In column (2), I include a dummy for Western Europe instead (excluding former communist countries). In column (3), I include dummies for former Soviet Union countries (FSU) separately, and, unfortunately, the results are no longer significant at 95%. In column (5), I also add in "Percentage of land area in the tropics and subtropics", and now the coefficient on genetic distance falls to -3.7, but with standard errors of 3.6.
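To illustrate why a regional dummy can make a coefficient like this disappear, here is a minimal sketch on synthetic data. It is not a reproduction of the Stata regressions described above: the data are simulated, the variable names are hypothetical, and by construction "distance" has no direct effect on income. A regional indicator drives both variables, so the slope on distance looks strongly negative until the indicator is included.

```python
import numpy as np


def ols(y, *regressors):
    """Ordinary least squares with an intercept; returns the coefficient vector."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def simulate(n=200, seed=0):
    """Synthetic countries: a region dummy shifts both distance and income."""
    rng = np.random.default_rng(seed)
    rich_region = (np.arange(n) % 2 == 0).astype(float)
    # Countries in the rich region are "closer"; distance has no causal role.
    distance = 1.0 - 0.8 * rich_region + 0.1 * rng.standard_normal(n)
    log_income = 8.0 + 2.0 * rich_region + 0.3 * rng.standard_normal(n)
    return rich_region, distance, log_income


if __name__ == "__main__":
    rich_region, distance, log_income = simulate()
    naive = ols(log_income, distance)[1]
    controlled = ols(log_income, distance, rich_region)[1]
    print(f"slope on distance, no region dummy:   {naive:.2f}")
    print(f"slope on distance, with region dummy: {controlled:.2f}")
```

The same mechanism is what a former-communist or Western Europe dummy tests for in a real cross-country sample: whether the distance coefficient survives once the regional level differences are absorbed.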

Hence, it would seem that genetic distance to the US is not a good predictor of income levels, even if you exclude SSA, which are counter-examples. The only caveat here is that I spent about 30 minutes coding this up while extremely jet-lagged.
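As a back-of-the-envelope check on the column (5) numbers quoted above, here is a minimal Python sketch. It uses only the coefficient and standard error from the text; the 1.96 cutoff is my assumption of a normal approximation, since the exact critical value depends on degrees of freedom I haven't reported here.

```python
# Figures quoted in the text for column (5): coefficient and standard error
coef = -3.7
se = 3.6

# t-statistic: the estimate divided by its standard error
t_stat = coef / se

# 1.96 is the usual two-sided 5% critical value under a normal approximation
# (an assumption; the exact cutoff depends on the degrees of freedom)
CRITICAL_95 = 1.96
significant_at_95 = abs(t_stat) > CRITICAL_95

# Approximate 95% confidence interval around the coefficient
ci_low = coef - CRITICAL_95 * se
ci_high = coef + CRITICAL_95 * se

print(f"t = {t_stat:.2f}, significant at 95%: {significant_at_95}")
print(f"approx. 95% CI: [{ci_low:.2f}, {ci_high:.2f}]")
```

With a standard error nearly as large as the coefficient itself, the approximate interval comfortably includes zero, which is all "no longer significant" means here.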

It's amazing to me that these two Harvard PhDs would want to continue to push this, and to stake their reputations on this. To me, there are a lot of ways I would like to spend both my research time and my free time. Even if I were them, I don't see why continuing with this project appeals to them so much. They now have written an additional 5-6 papers, it seems, repeating the same mistakes, even after they became aware that their results are not robust. The answer must be that now they perceive themselves to be in a life-or-death situation in which their reputations are at stake. They really need this correlation they discovered to be robust. And so they continue to churn out papers using this measure. In fact, I suspect no one really cares. That's why it's surprising they haven't moved on.

Another strange thing about their new paper is that, in the comments over at Gelman, they said that their paper was mainly about the country-difference pair regressions. I showed that these results, too, are not robust once one separates out poor sub-Saharan Africa from richer North Africa, and includes a full set of continent fixed effects. I should add that Spolaore and Wacziarg claim that when they run these same regressions, their results are robust. However, they won't provide us with their data or regressions to check. Nevertheless, in their new paper, they've gone back to the cross-country income regressions, which they previously conceded were not robust. I guess they were hoping that their comments over at Gelman's blog (and at Marginal Revolution) would be forgotten.

In any case, if Spolaore and Wacziarg want to respond with more gibberish, I'll yield to them the floor. I do wonder what kind of evidence they would want to see that would convince them that there is nothing here. Figures (3) and (4) are already pretty damning, not to mention the table after it. I'm sure they'll continue to be as defiant as ever, which should provide some comic relief for the rest of us.

Update: I have put in another request to Wacziarg and Spolaore for their data. I wouldn't hold your breath. I'd be willing to bet my life savings that they will not provide their data and code. They do provide their new genetic distance data, presumably so that other people can use it and cite them. I downloaded this data to test robustness. Stay tuned for results!

Update 2: Someone writes in, directing me to a link to the debunked genopolitics work on whether there is a "voting gene".

Wednesday, August 16, 2017

Alfred Crosby Deserves a Nobel Prize in Economics...

for his absolutely brilliant insights into Economic History and Development. Arguably, he should share it with Jared Diamond, who really succeeded in popularizing Crosby's ideas and also added quite a bit himself, although some might say that Diamond could have cited Crosby a bit more than he did.

For it was Crosby, a historian by trade, who decided he would look into the little-researched field of ecological history. Any grad student in Economic History/Development, or, scratch that, any grad student in economics, should go read at least one of Ecological Imperialism or The Columbian Exchange. The latter term, coined by Crosby, highlights the very unequal exchange of diseases and technologies between peoples of the Old World and the New. Europe got potatoes, corn, tomatoes, chocolate, and plastic. Native Americans got grains, livestock, bacon, and a whole host of vicious diseases which leveled their populations. The only disease to come from the Americas was perhaps syphilis, which is still debated.

Suppose you wanted to believe that Europeans had better institutions, and thus colonized the Americas rather than vice-versa. However, Crosby tells the story of when the first American settlers coming over the Appalachian mountains arrived in Kentucky, and thought that Kentucky bluegrass was a native plant. In fact, Crosby notes that Kentucky bluegrass actually is native to France. It arrived in the Americas aboard a ship, and just happened to colonize the continent even faster than humans. The same was true of European dogs, mice, rabbits, and bees. It would be bizarre to argue that rabbits had better property rights institutions, and thus colonized the Americas. And, given that humans' colonization of the Americas was not unique, it seems wrong to focus on unique aspects of humans. Weeds, bugs, and various other animals pulled off the same feat.

Here's an excerpt from a Crosby essay:

Europeans in North America, especially those with an interest in gardening and botany, are often stricken with fits of homesickness at the sight of certain plants which, like themselves, have somehow strayed thousands of miles eastward across the Atlantic. Vladimir Nabokov, the Russian exile, had such an experience on the mountain slopes of Oregon:

Do you recognize that clover?

Dandelions, l’or du pauvre?

(Europe, nonetheless, is over.)

A century earlier the success of European weeds in America inspired Charles Darwin to goad the American botanist Asa Gray: “Does it not hurt your Yankee pride that we thrash you so confoundedly? I am sure Mrs. Gray will stick up for your own weeds. Ask her whether they are not more honest, downright good sort of weeds.”

Why was the Columbian Exchange so unequal? Essentially it comes down to the large Eurasian landmass being a far more competitive ecosystem than the north-to-south oriented Americas (admittedly, Diamond deserves credit for noticing the difference in the axes of the continents). Any type of grass in the large Eurasian landmass that adopted a new, useful trait would come to dominate the entire continent, as much of it shared similar climate. By contrast, if a type of grass in New York has some new beneficial trait, it will be unlikely to spread to Mexico or northern Canada given the vastly different climates there. Thus, evolution would play out a little faster in Eurasia, as would technological progress among human societies. On the other hand, the distribution of useful large domesticable animals was probably just random. And this spurred the pandemic diseases.

Crosby notes that most European settlers ended up dominating the "Neo-Europes" -- areas of the world similar to Europe in terms of climate -- but much less so in the tropics. Many economists believe that institutions, or genetics, are the reason the tropics are poor. However, Crosby lays out another reason:

The reasons for the relative failure of the European demographic takeover in the tropics are clear. In tropical Africa, until recently, Europeans died in droves of the fevers; in tropical America they died almost as fast of the same diseases, plus a few native American additions. Furthermore, in neither region did European agricultural techniques, crops, and animals prosper. Europeans did try to found colonies for settlement, rather than merely exploitation, but they failed or achieved only partial success in the hot lands. The Scots left their bones as monument to their short-lived colony at Darien at the turn of the eighteenth century. The English Puritans who skipped Massachusetts Bay Colony to go to Providence Island in the Caribbean Sea did not even achieve a permanent settlement, much less a Commonwealth of God. The Portuguese who went to northeastern Brazil created viable settlements, but only by perching themselves on top of first a population of native Indian laborers and then, when these faded away, a population of laborers imported from Africa. They did achieve a demographic takeover, but only by interbreeding with their servants. The Portuguese in Angola, who helped supply those servants, never had a breath of a chance to achieve a demographic takeover. There was much to repel and little to attract the mass of Europeans to the tropics, and so they stayed home or went to the lands where life was healthier, labor more rewarding, and where white immigrants, by their very number, encouraged more immigration.

One of the big problems with both Acemoglu, Johnson, and Robinson's (AJR) work on development, and also with Spolaore and Wacziarg's QJE paper on genetic distance, is that they hadn't actually read, or properly internalized, the teachings of Crosby and Diamond. AJR argued that disease climate was a proxy for institutions and not geography, whereas clearly one might make a case that it's also a good proxy for climatic similarity. Areas of the world where European peoples died are also areas of the world where European crops and cattle died. This was an insight that AJR missed. If Acemoglu got a John Bates Clark for his work on institutions, then Crosby certainly deserves a Nobel.

Of course, there's more here. The insights of Crosby/Diamond don't end in 1500. First, history casts long shadows. But, aside from that, the world was largely agrarian even long after the Industrial Revolution in 1800. In Malthusian societies, agricultural technologies are very important. If you are a farmer in Angola, those new varieties of wheat, and farming technologies discovered in the American midwest in 1900, or even 1950, are not going to help you. If you live in Australia, the Southern Cone countries, or Europe, they will.

One thing that is fascinating is that, while the US is similar to Europe climatically, it's not 1-for-1. The US is much hotter and more humid in summer. The US south actually does share some similarities with Africa. And it was in the US where air-conditioning was invented. This technology almost certainly was more helpful in the tropics. (Note: I haven't turned on my AC all summer in Moscow, Russia!...) But, again, I see this as a Crosby/Diamond type of effect.

In any case, here's a nice interview of Crosby. Here's a short reading you can give to students. Here's Crosby's wikipedia page.

Alfred Crosby is now 86 years old! He deserves a real Nobel, not just the fake one awarded in Economics, and he deserves it sooner rather than later.

Wednesday, August 9, 2017

Ranking Academic Economic Journals by Speed

Before, I shared some thoughts for improving academic journals. One of my complaints is about how long it takes. So, I decided to make a new ranking based on how long journals take to respond using ejmr data (among the top 50 journals ranked by citations, discounted recursive, last 10 years, although taken from last month). In the table below, # is a journal's rank by citations, and then there is data on acceptance rate, desk rejection rate, average time to first response (how the data is sorted), the median, and then the 25th and 75th percentiles. The sample size is the last column.

Not surprisingly, the QJE, which desk rejects 62% of papers, is the fastest, with an average time of little more than two weeks. JEEA, with a 56% desk rejection rate, is up next, followed by JHR, with a 58% desk rejection rate. Finance journals, which often pay for quick referee reports, tend to be fast, while Macro and econometric journals tend to be the slowest. The JME, which is notorious, clocks in at an average of 7.7 months, with a median of 4 months, but a 75th percentile of 9 months. On the other hand, the JME's acceptance rate for those who report their submission results at ejmr is 21%, and it does not desk reject. It might be worth doing a separate ranking for those papers that actually go out to referees. In any case, among top 5 journals, the JPE is the worst, clocking in at 4.8 months on average.

Of course, when submitting, it's still journal quality/citations that matter most. But review times in excess of 6 to 9 months can be career killers. Probably, it's the right tails which are the most important here, which is why I sorted by average rather than median.
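To see why sorting by the average rather than the median picks up the right tail, here is a minimal Python sketch. The two journals and their response times are made up purely for illustration (they are not ejmr data), and `summarize` is a hypothetical helper.

```python
import statistics

# Hypothetical first-response times in months for two made-up journals
# (illustrative numbers only, NOT taken from the ejmr data).
journal_a = [3, 3, 4, 4, 4, 4, 5, 5]      # consistently moderate
journal_b = [1, 2, 3, 4, 4, 9, 14, 24]    # same median, long right tail


def summarize(times):
    """Return the mean and median response time for a list of months."""
    return {
        "mean": statistics.mean(times),
        "median": statistics.median(times),
    }


summary_a = summarize(journal_a)
summary_b = summarize(journal_b)

# Both journals look identical by median, but the mean exposes the
# occasional very long wait at journal_b.
print("A:", summary_a)
print("B:", summary_b)
```

A median-based ranking would treat these two journals as tied, while a mean-based ranking pushes the one with the occasional two-year wait further down the list.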

| # | Journal Name | Accept % | Desk Reject % | Avg. Time (Months) | Median Time (Months) | 25th Pctile (Months) | 75th Pctile (Months) | N |

| 1 | Quarterly Journal of Economics | 1% | 62% | 0.6 | 0 | 0 | 1 | 71 |

| 12 | Journal of the European Economic Association | 4% | 56% | 1.2 | 0.5 | 0 | 2 | 25 |

| 14 | Journal of Human Resources | 18% | 58% | 1.3 | 1 | 0 | 2 | 38 |

| 6 | American Economic Journal: Applied Economics | 3% | 33% | 1.6 | 2 | 0 | 2.5 | 36 |

| 31 | European Economic Review | 29% | 50% | 1.6 | 1 | 0 | 3 | 34 |

| 15 | Review of Financial Studies | 15% | 20% | 1.8 | 2 | 1 | 2 | 20 |